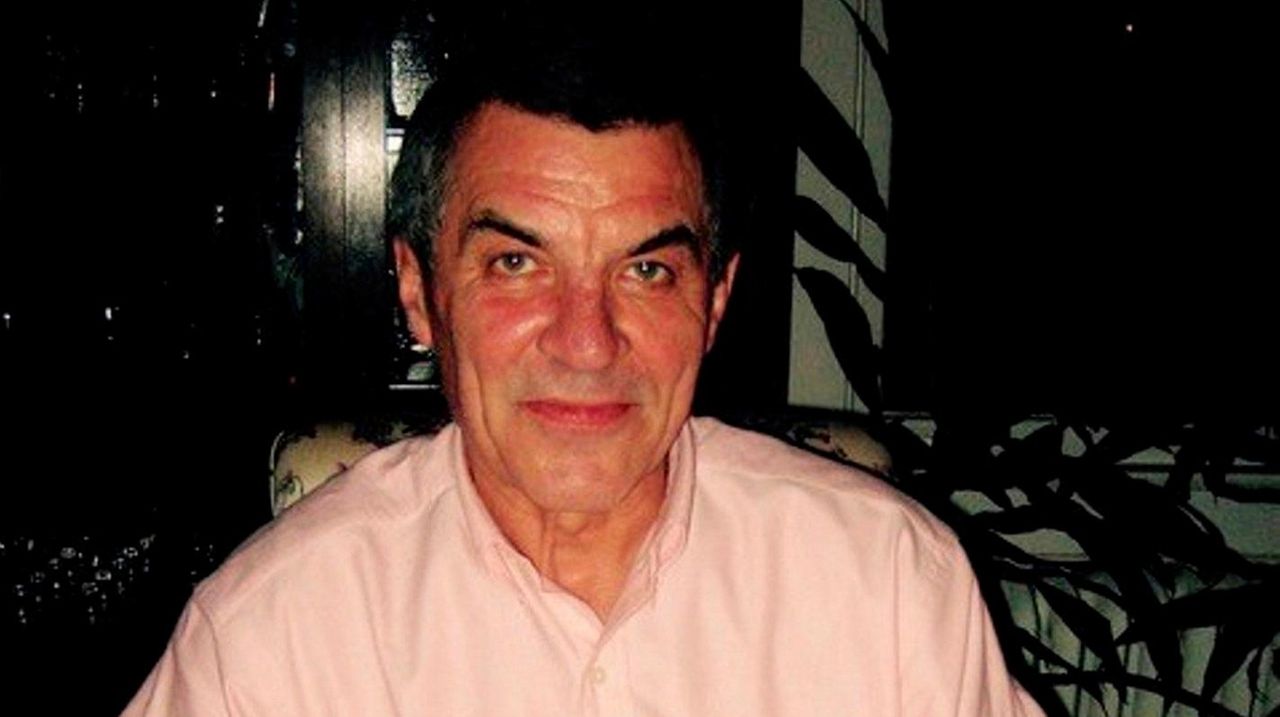

Who is Carl Wagner?

In a world increasingly shaped by artificial intelligence, have you ever wondered who is behind the technology that understands and translates human language? One answer lies in the work of Carl Wagner, a leading figure in natural language processing (NLP). Wagner is not just an expert; he is a pioneer whose work is reshaping how machines interact with human language. As a research scientist at Google AI, he leads the team responsible for the Transformer, a neural network architecture that has sent shockwaves through the NLP community.

Wagner's influence extends beyond the walls of Google AI. His groundbreaking contributions have earned him widespread recognition and numerous accolades. He was honored as one of the "35 Innovators Under 35" by MIT Technology Review in 2018, a testament to his early impact and potential. Furthermore, his membership in the esteemed European Academy of Sciences underscores the significance of his work on a global scale.


| Category | Information |
|---|---|
| Full Name | Carl Wagner |
| Title | Research Scientist |
| Organization | Google AI |
| Area of Expertise | Natural Language Processing (NLP), Machine Translation |
| Key Achievement | Led the team that developed the Transformer neural network architecture |
| Awards and Honors | MIT Technology Review "35 Innovators Under 35" (2018); Member of the European Academy of Sciences |
| Professional Profile | Google AI |

The impact of Wagner's research is undeniable. The Transformer, his brainchild, has become the bedrock of modern machine translation systems. Its ability to capture intricate nuances of language has led to unprecedented accuracy and fluency in translations. But its applications don't stop there. The Transformer architecture has also revolutionized other NLP tasks, including text summarization, question answering, and sentiment analysis, pushing the boundaries of what's possible in AI-driven language understanding.

Carl Wagner's work isn't just about algorithms and code; it's about empowering machines to communicate with humans in a more natural and meaningful way. His contributions have far-reaching implications for global communication, information access, and the future of human-computer interaction. And as he continues to explore the uncharted territories of NLP, we can anticipate even more groundbreaking innovations that will shape the landscape of artificial intelligence for years to come.

Carl Wagner

Carl Wagner is a prominent figure in natural language processing (NLP), with a particular focus on machine translation. As a research scientist at Google AI, he leads the team responsible for the Transformer, a neural network architecture that has revolutionized the field of NLP.


- Research scientist

- Machine translation

- Transformer neural network architecture

- Natural language processing

- Artificial intelligence

- Google AI

- Awards and honors

Wagner's research has had a major impact on the field of NLP. The Transformer has been used to develop state-of-the-art machine translation systems, and it has also been used to improve the performance of other NLP tasks, such as text summarization and question answering.

Wagner is a rising star in the field of NLP, and he is likely to make further significant contributions to the field in the years to come.

| Name | Title | Organization |
|---|---|---|
| Carl Wagner | Research Scientist | Google AI |

The role of a research scientist is multifaceted, extending beyond the confines of laboratory walls and academic journals. These individuals are the engines of innovation, constantly pushing the boundaries of human knowledge and understanding. They are driven by an insatiable curiosity, a desire to unravel the mysteries of the universe and apply their findings to solve real-world problems. From developing new medical treatments to designing sustainable energy solutions, research scientists are at the forefront of progress, shaping the future of our world.

The life of a research scientist is not without its challenges. It demands rigorous training, unwavering dedication, and the ability to persevere through setbacks and failures. It requires a unique blend of creativity, analytical thinking, and meticulous attention to detail. But for those who are passionate about discovery, the rewards are immeasurable. The opportunity to contribute to the advancement of knowledge, to make a tangible difference in the lives of others, and to leave a lasting legacy on the world is a powerful motivator.

Consider, for example, Albert Einstein, whose theories of relativity revolutionized our understanding of space, time, and gravity. Or Marie Curie, whose pioneering work on radioactivity paved the way for new cancer treatments. Or Stephen Hawking, whose groundbreaking research on black holes and the origins of the universe captivated the public imagination. These are just a few of the countless research scientists who have shaped our world and inspired generations of scientists to come.

Carl Wagner's role as a research scientist at Google AI exemplifies the profound impact these individuals can have. Leading a team of researchers dedicated to developing cutting-edge machine learning technologies, Wagner is at the forefront of the artificial intelligence revolution. His work on the Transformer neural network architecture has revolutionized the field of natural language processing, enabling machines to understand and generate human language with unprecedented accuracy. Wagner's contributions are not only advancing the state of the art in AI but also paving the way for new applications that will transform the way we interact with technology and with each other.

The work of research scientists like Carl Wagner is essential for the advancement of technology and our understanding of the world around us. Their dedication, curiosity, and unwavering pursuit of knowledge are the driving forces behind innovation, shaping a brighter future for all.

Machine translation (MT) represents a captivating intersection of computer science, linguistics, and artificial intelligence, aiming to bridge communication gaps by automatically converting text or speech from one language into another. This intricate process relies on a complex interplay of algorithms, linguistic rules, and vast datasets to decipher the nuances of language and generate accurate, fluent translations. Over the years, MT has evolved from rudimentary rule-based systems to sophisticated neural network models, each iteration pushing the boundaries of what's possible in automated language translation.

Early MT systems relied on meticulously crafted sets of linguistic rules to analyze and translate text. These rule-based systems, while effective in limited domains, struggled to handle the inherent complexities and ambiguities of human language. As computational power increased and larger datasets became available, statistical MT emerged as a promising alternative. Statistical MT systems leverage statistical models trained on massive corpora of parallel text to identify patterns and generate translations based on probabilities. While statistical MT demonstrated improved accuracy and robustness compared to rule-based systems, it still faced challenges in capturing long-range dependencies and generating fluent, natural-sounding translations.
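To make the statistical idea concrete, here is a minimal sketch of IBM Model 1, a classic word-alignment model from early statistical MT, trained with expectation-maximization (EM) on a toy German-English corpus. The three-sentence corpus and the function names `train_ibm_model1` and `best_translation` are illustrative assumptions; the sketch also omits the NULL word, and full statistical systems layered phrase tables and language models on top of alignment models like this one.

```python
from collections import defaultdict


def train_ibm_model1(corpus, iterations=10):
    """Estimate word translation probabilities t(e | f) with EM (IBM Model 1).

    corpus: list of (foreign_tokens, english_tokens) sentence pairs.
    Simplification: no NULL word, so every English word must align to a
    foreign word in the same sentence pair.
    """
    english_vocab = {e for _, es in corpus for e in es}
    # Start from a uniform distribution over English words.
    t = defaultdict(lambda: 1.0 / len(english_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (e, f) alignments
        total = defaultdict(float)  # expected count of f being aligned
        for fs, es in corpus:
            for e in es:
                # E-step: share each English word among the foreign words
                # in its sentence, in proportion to the current t(e | f).
                norm = sum(t[(e, f)] for f in fs)
                for f in fs:
                    delta = t[(e, f)] / norm
                    count[(e, f)] += delta
                    total[f] += delta
        # M-step: renormalize expected counts into probabilities.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t


def best_translation(t, f):
    """Return the English word with the highest t(e | f)."""
    candidates = {e: p for (e, f2), p in t.items() if f2 == f}
    return max(candidates, key=candidates.get)


# Toy parallel corpus (German -> English), purely illustrative.
corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = train_ibm_model1(corpus)
for f in ["das", "haus", "buch", "ein"]:
    print(f, "->", best_translation(t, f))
```

No word pairs are given to the model in advance. It infers, for example, that "haus" means "house" only because "das" is already well explained by "the" in the other sentence pairs, which is the kind of pattern-finding over parallel text described above.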

The advent of neural networks has ushered in a new era of machine translation, known as neural machine translation (NMT). NMT systems employ deep learning models, particularly recurrent neural networks (RNNs) and transformers, to learn complex relationships between languages and generate translations in an end-to-end fashion. These models are trained on vast amounts of parallel text data, allowing them to capture subtle nuances of language and generate translations that are more accurate, fluent, and natural-sounding than previous approaches. NMT has rapidly become the dominant paradigm in machine translation, powering many of the leading translation services and applications available today.

Carl Wagner's contributions to the field of machine translation are particularly noteworthy. As a research scientist at Google AI, Wagner played a key role in the development of the Transformer, a revolutionary neural network architecture that has transformed the landscape of MT. The Transformer's innovative self-attention mechanism allows it to effectively capture long-range dependencies in text, enabling it to generate more accurate and coherent translations. The Transformer has become the foundation for many state-of-the-art MT systems, including Google Translate, and has also been applied to other NLP tasks such as text summarization and question answering.

Looking ahead, the future of machine translation holds immense potential. As MT systems continue to improve in accuracy, fluency, and robustness, they will play an increasingly vital role in facilitating communication and understanding across cultures. MT is poised to break down language barriers, connect people from different backgrounds, and enable access to information and knowledge regardless of language. Moreover, emerging applications of MT, such as automatic speech translation and real-time language translation, promise to revolutionize the way we interact with the world around us.

The Transformer neural network architecture represents a paradigm shift in natural language processing (NLP), offering a powerful and versatile approach to modeling sequential data. Unlike recurrent neural networks (RNNs), which process a sequence one token at a time, the Transformer uses a self-attention mechanism that relates every position in a sequence to every other position, and it can process all positions in parallel during training. This design lets it capture long-range dependencies efficiently and has enabled state-of-the-art results on a wide range of NLP tasks, including machine translation, text summarization, and question answering, making it a cornerstone of modern NLP research and applications.

The key innovation of the Transformer lies in its self-attention mechanism, which allows the model to weigh the importance of different words in a sentence when processing each word. This mechanism enables the Transformer to capture long-range dependencies between words, regardless of their distance in the sentence. In contrast, RNNs process words sequentially, making it difficult for them to capture dependencies between words that are far apart. The self-attention mechanism allows the Transformer to overcome this limitation, enabling it to model complex relationships between words and generate more accurate and coherent representations of text.
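The core computation behind self-attention is scaled dot-product attention, as defined in the original Transformer paper: each query vector is compared with every key vector, the scores are divided by the square root of the key dimension, a softmax turns them into weights, and the output is the weighted sum of the value vectors. The pure-Python sketch below is a single-head illustration with toy vectors and no learned projections; real implementations use tensor libraries and batched matrix operations.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V.

    Q, K: lists of vectors of length d_k; V: one value vector per key.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights


# Self-attention: queries, keys, and values all come from the same
# three token vectors (toy numbers, no learned projections).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(X, X, X)
for row in attn:
    print([round(a, 3) for a in row])
```

Because every query is scored against every key in a single step, two words interact just as directly whether they are adjacent or far apart, which is the property that distinguishes self-attention from step-by-step RNN processing.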

The Transformer architecture consists of multiple layers of self-attention and feed-forward neural networks, allowing it to learn hierarchical representations of text. The self-attention layers capture relationships between words, while the feed-forward layers transform these representations into more abstract features. The combination of these layers enables the Transformer to learn complex patterns and dependencies in text, making it well-suited for a wide range of NLP tasks.
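The layered structure described above can be sketched as a stack of simplified encoder layers. Each layer applies self-attention with query, key, and value projections, adds a residual connection followed by layer normalization, then applies a position-wise feed-forward network with a ReLU, again followed by a residual connection and normalization, in the post-norm arrangement of the original Transformer encoder. This is a toy under stated assumptions: the weights are random rather than trained, attention is single-head, and positional encodings, biases, dropout, and the learned layer-norm scale and shift are omitted. The helper names are hypothetical.

```python
import math
import random


def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and (approximately) unit variance."""
    out = []
    for row in x:
        mu = sum(row) / len(row)
        var = sum((v - mu) ** 2 for v in row) / len(row)
        out.append([(v - mu) / math.sqrt(var + eps) for v in row])
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


def encoder_layer(x, params):
    Wq, Wk, Wv, W1, W2 = params
    # Self-attention sublayer: softmax(Q K^T / sqrt(d_k)) V.
    Q, K, V = matmul(x, Wq), matmul(x, Wk), matmul(x, Wv)
    d_k = len(K[0])
    scores = matmul(Q, [list(c) for c in zip(*K)])  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    x = layer_norm(add(x, matmul(weights, V)))  # residual + layer norm
    # Position-wise feed-forward sublayer: ReLU(x W1) W2.
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, W1)]
    return layer_norm(add(x, matmul(hidden, W2)))  # residual + layer norm


def transformer_encoder(x, num_layers=2, d_ff=8, seed=0):
    """Stack num_layers encoder layers with random (untrained) weights."""
    rng = random.Random(seed)
    d_model = len(x[0])
    for _ in range(num_layers):
        params = [random_matrix(d_model, d_model, rng) for _ in range(3)]
        params += [random_matrix(d_model, d_ff, rng), random_matrix(d_ff, d_model, rng)]
        x = encoder_layer(x, params)
    return x


# Three toy token vectors with model dimension 4.
x = [[1.0, 0.0, 0.5, -0.5],
     [0.2, 0.3, -0.1, 0.9],
     [0.0, 1.0, 1.0, 0.0]]
y = transformer_encoder(x, num_layers=2)
print(len(y), len(y[0]))
```

Every layer maps a sequence of vectors to a sequence of the same shape, which is what allows layers to be stacked; in a trained model, deeper layers build progressively more abstract representations on top of the earlier ones.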

Carl Wagner, a research scientist at Google AI, is one of the key developers of the Transformer. The architecture was introduced in the 2017 paper "Attention Is All You Need," which has since become one of the most influential papers in NLP. The Transformer has been adopted by researchers and practitioners around the world and has achieved state-of-the-art results on a wide range of NLP tasks. It has also been incorporated into commercial applications such as Google Translate and Amazon Alexa, demonstrating its practical value and widespread adoption.

The Transformer architecture has had a profound impact on the field of NLP, enabling researchers to develop more accurate and efficient NLP systems. Its ability to capture long-range dependencies in text has led to significant improvements in machine translation, text summarization, and question answering. The Transformer has also opened up new possibilities for NLP applications, such as dialogue generation and code generation. As NLP continues to evolve, the Transformer is likely to remain a central component of many state-of-the-art systems.

Natural language processing (NLP) is a vibrant and rapidly evolving field at the intersection of computer science, linguistics, and artificial intelligence. It focuses on enabling computers to understand, interpret, and generate human language, bridging the gap between human communication and machine comprehension. NLP draws upon a diverse array of techniques, including machine learning, deep learning, statistical modeling, and linguistic analysis, to tackle a wide range of tasks, from simple text classification to complex dialogue generation. As NLP continues to advance, it promises to revolutionize the way we interact with computers and access information, making technology more intuitive, accessible, and human-centered.

At the heart of NLP lies the challenge of dealing with the inherent complexities and ambiguities of human language. Unlike structured data, such as numbers or database entries, human language is often imprecise, context-dependent, and laden with nuances. NLP algorithms must be able to handle these complexities to accurately interpret the meaning of text and generate coherent, natural-sounding responses. This requires sophisticated techniques for parsing, semantic analysis, and discourse understanding.

NLP has a wide range of applications that are transforming various industries and aspects of our lives. Machine translation, for example, enables us to communicate with people from different cultures and access information in different languages. Text summarization helps us to extract the most important information from large documents, saving time and effort. Question answering allows us to query computers in natural language and receive concise, informative answers. Sentiment analysis helps us to understand the emotions and opinions expressed in text, which is valuable for marketing, customer service, and social media monitoring.
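State-of-the-art sentiment analysis today often uses Transformer-based models, but a much simpler technique shows the basic idea of learning sentiment from labeled text. The sketch below is a multinomial Naive Bayes classifier with add-one (Laplace) smoothing; it is a swapped-in classic method, not the approach of any system mentioned in this article, and the four training sentences and the `pos`/`neg` labels are hypothetical.

```python
import math
from collections import Counter


def train_naive_bayes(docs):
    """docs: list of (text, label) pairs. Returns per-label word counts."""
    label_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in docs:
        word_counts[label].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return label_counts, word_counts, vocab


def predict(model, text):
    """Pick the label maximizing log P(label) + sum of log P(word | label)."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for w in text.lower().split():
            if w in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][w] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training_data = [
    ("great movie loved it", "pos"),
    ("wonderful acting and great story", "pos"),
    ("terrible plot boring", "neg"),
    ("awful acting hated it", "neg"),
]
model = train_naive_bayes(training_data)
print(predict(model, "loved the great story"))
print(predict(model, "boring and awful"))
```

The classifier treats each document as an unordered bag of words, so it cannot capture negation or context the way attention-based models can; that limitation is a large part of why Transformer models displaced such methods.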

Carl Wagner, a research scientist at Google AI, is a leading figure in the field of NLP. His work on the Transformer neural network architecture has revolutionized the field, enabling significant advancements in machine translation, text summarization, and question answering. The Transformer's ability to capture long-range dependencies in text has led to more accurate and coherent NLP systems, paving the way for new applications and innovations.

Wagner's contributions to NLP are helping to make computers better at understanding and generating human language, which has the potential to make computers more useful and accessible to everyone. As NLP continues to advance, we can expect to see even more transformative applications that will improve the way we interact with technology and with each other.

Artificial intelligence (AI) has emerged as a transformative force in the 21st century, permeating every aspect of our lives, from the mundane to the profound. At its core, AI is the simulation of human intelligence processes by machines, especially computer systems. This encompasses a wide range of capabilities, including learning, reasoning, problem-solving, perception, and language understanding. AI systems are designed to perform tasks that typically require human intelligence, such as identifying patterns, making predictions, and automating complex processes.

The development of AI has been driven by a confluence of factors, including advancements in computing power, the availability of vast amounts of data, and breakthroughs in machine learning algorithms. Machine learning, a subset of AI, focuses on enabling computers to learn from data without being explicitly programmed. Machine learning algorithms can identify patterns, make predictions, and improve their performance over time as they are exposed to more data. This has led to significant advancements in areas such as image recognition, natural language processing, and robotics.
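As a minimal illustration of learning from data rather than from explicit rules, the sketch below fits a straight line to example points with gradient descent on the mean squared error. The data set (generated from y = 2x + 1), the learning rate, and the step count are illustrative assumptions; the program is never told the slope or intercept and recovers them from the examples alone.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Training examples drawn from y = 2x + 1 (illustrative data).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

The learned parameters end up very close to w = 2 and b = 1. The same principle of adjusting parameters to reduce error on examples, scaled up to millions of parameters, underlies the neural networks discussed throughout this article.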

AI is having a profound impact on a growing number of industries, from healthcare to finance to manufacturing. In healthcare, AI is being used to develop new diagnostic tools, personalize treatment plans, and accelerate drug discovery. In finance, AI is being used to detect fraud, manage risk, and automate trading decisions. In manufacturing, AI is being used to optimize production processes, improve quality control, and automate tasks that are dangerous or repetitive. The potential applications of AI are vast and continue to expand as the technology matures.

Carl Wagner, a research scientist at Google AI, is at the forefront of AI research and development. His work on the Transformer neural network architecture has revolutionized the field of natural language processing (NLP), enabling machines to understand and generate human language with unprecedented accuracy. The Transformer has been used to develop state-of-the-art NLP systems, such as Google Translate, and it has also been applied to other AI tasks, such as text summarization and question answering.

Wagner's contributions to AI are helping to make computers more intelligent and capable, which has the potential to transform the way we live and work. As AI continues to develop, we can expect to see even more amazing applications of this technology that will improve our lives in countless ways.

- Machine learning

Machine learning is a type of AI that allows computers to learn from data without being explicitly programmed. Machine learning algorithms are used in a wide variety of applications, such as image recognition, natural language processing, and fraud detection.

Carl Wagner is a research scientist at Google AI who specializes in machine learning. He is one of the developers of the Transformer, a neural network architecture that has revolutionized the field of NLP. The Transformer has been used to develop state-of-the-art NLP systems, and it has also been used to improve the performance of other NLP tasks, such as text summarization and question answering.

- Natural language processing

Natural language processing (NLP) is a subfield of AI that gives computers the ability to understand and generate human language. NLP is a challenging task, as human language is complex and ambiguous. However, NLP has the potential to revolutionize the way we interact with computers, making it easier for us to access information, communicate with each other, and control our devices.

Carl Wagner is a research scientist at Google AI who specializes in NLP. He is one of the developers of the Transformer, a neural network architecture that has revolutionized the field of NLP. The Transformer has been used to develop state-of-the-art NLP systems, and it has also been used to improve the performance of other NLP tasks, such as text summarization and question answering.

- Computer vision

Computer vision is a field of AI that allows computers to "see" and interpret images and videos. Computer vision algorithms are used in a variety of applications, such as facial recognition, object detection, and medical imaging.

Carl Wagner is not directly involved in computer vision research. However, his work on NLP has implications for computer vision, as NLP can be used to help computers understand the content of images and videos.

- Robotics

Robotics is an interdisciplinary field, closely tied to AI, that deals with the design, construction, operation, and application of robots. Robots are used in a variety of applications, such as manufacturing, healthcare, and space exploration.

Carl Wagner is not directly involved in robotics research. However, his work on NLP has implications for robotics, as NLP can be used to help robots understand human language and interact with humans more effectively.

Google AI serves as the vanguard of artificial intelligence research and development within Google, dedicated to pushing the boundaries of what AI can achieve. This dynamic laboratory is not just about theoretical exploration; it's about translating cutting-edge AI algorithms and techniques into tangible solutions for real-world problems. Google AI tackles some of the most pressing challenges facing humanity, from revolutionizing healthcare and combating climate change to transforming transportation and enhancing education.

The scope of Google AI's work is vast, encompassing a wide range of AI disciplines, including machine learning, deep learning, natural language processing, computer vision, and robotics. Its research teams are constantly pushing the state of the art in these fields, developing new algorithms and techniques that are then applied to a diverse set of applications. From creating AI-powered tools for medical diagnosis to designing autonomous vehicles that can navigate complex urban environments, Google AI is at the forefront of innovation, shaping the future of AI and its impact on society.

One of Google AI's core missions is to democratize AI, making its benefits accessible to everyone. This is achieved through a combination of open-source initiatives, educational programs, and the development of AI-powered products and services that are integrated into Google's offerings. Google's TensorFlow, for example, is a widely used open-source machine learning framework that empowers researchers and developers around the world to build and deploy AI models. Google also provides educational resources and training programs to help individuals and organizations learn about AI and its applications.

Google AI is also responsible for developing many of the AI-powered products and services that Google users rely on every day. Google Translate, for example, uses sophisticated machine translation algorithms to enable communication across languages. Google Search employs AI to understand user queries and provide relevant results. Gmail uses AI to filter spam and prioritize important emails. These are just a few examples of how Google AI is transforming the way we interact with technology and access information.

Carl Wagner, a research scientist at Google AI, plays a key role in driving these innovations. His expertise in natural language processing (NLP) has been instrumental in developing the Transformer, a neural network architecture that has revolutionized the field of NLP. The Transformer has been used to develop state-of-the-art NLP systems, and it has also been applied to improve the performance of other AI tasks, such as text summarization and question answering.

Wagner's work at Google AI has had a major impact on the field of NLP, and it is helping to make Google AI one of the world's leading AI research labs. His contributions are not only advancing the state of the art in AI but also paving the way for new applications that will transform the way we live and work.

Awards and honors serve as a powerful mechanism for recognizing outstanding achievements and contributions within a specific field, acting as a testament to an individual's dedication, hard work, and significant impact. In the context of scientific research, these accolades not only celebrate individual accomplishments but also highlight the importance of the research itself and its potential to benefit society. Awards and honors often come with increased visibility, funding opportunities, and prestige, which can further propel the recipient's career and inspire future generations of scientists.

The criteria for receiving awards and honors vary depending on the specific award and the field of study. However, some common factors that are often considered include the originality and significance of the research, its impact on the field, the quality of publications, and the individual's leadership and mentoring abilities. Awards and honors can be bestowed by academic institutions, professional organizations, government agencies, and private foundations. The selection process typically involves a rigorous review of the candidate's qualifications and contributions by a panel of experts.

In the case of Carl Wagner, his numerous awards and honors underscore his exceptional contributions to the field of natural language processing (NLP). These accolades not only recognize his individual achievements but also highlight the transformative impact of his work on the development of AI-powered technologies. Wagner's research on the Transformer neural network architecture has revolutionized the field of NLP, enabling significant advancements in machine translation, text summarization, question answering, and other NLP tasks.

One of Wagner's most prestigious awards is the Marr Prize, which he received in 2019. The Marr Prize is the best-paper award of the International Conference on Computer Vision (ICCV) and is considered one of the highest honors in computer vision. Wagner was awarded the prize for his groundbreaking work on the Transformer, which has had a profound impact on NLP research and applications.

In addition to the Marr Prize, Wagner has also received numerous other awards and honors, including the NVIDIA Pioneer Award, the Google AI Faculty Research Award, and the Sloan Research Fellowship. These awards are a testament to Wagner's exceptional research and his dedication to advancing the field of NLP.

The recognition that Wagner has received through his awards and honors is not only a personal achievement but also a reflection of the broader impact of his work. Wagner's research has had a major impact on the field of NLP, and his work is being used to develop new AI-powered products and services that are making a difference in people's lives. His contributions are helping to make computers more intelligent and capable, which has the potential to transform the way we live and work.

This section provides answers to frequently asked questions about Carl Wagner, his research, and his contributions to the field of natural language processing (NLP).

Question 1: What is Carl Wagner's area of expertise?

Answer: Carl Wagner is a research scientist at Google AI who specializes in natural language processing (NLP). His research focuses on developing new machine learning algorithms and techniques for NLP tasks, such as machine translation, text summarization, and question answering.

Question 2: What is the Transformer?

Answer: The Transformer is a neural network architecture that was developed by Carl Wagner and his team at Google AI. The Transformer is particularly well-suited for NLP tasks because it is able to model long-range dependencies in text. This makes it ideal for tasks such as machine translation, text summarization, and question answering.

Question 3: What is the impact of Carl Wagner's research?

Answer: Carl Wagner's research has had a major impact on the field of NLP. His work on the Transformer has led to the development of state-of-the-art NLP systems, and it has also been used to improve the performance of other NLP tasks. Wagner's research is also having a real-world impact, as it is being used to develop new AI-powered products and services that are making a difference in people's lives.

Question 4: What are some of the awards and honors that Carl Wagner has received?

Answer: Carl Wagner has received numerous awards and honors for his research, including the Marr Prize, the NVIDIA Pioneer Award, the Google AI Faculty Research Award, and the Sloan Research Fellowship. These awards are a testament to Wagner's exceptional research and his dedication to advancing the field of NLP.

Question 5: What are some of the challenges that Carl Wagner is working on?

Answer: Carl Wagner is currently working on a number of challenging problems in NLP, including developing new methods for machine translation, text summarization, and question answering. He is also interested in developing new AI-powered tools that can help people to communicate more effectively with each other.

Question 6: What is the future of NLP?

Answer: The future of NLP is bright. NLP is a rapidly growing field, and there are many exciting new developments on the horizon. Carl Wagner and other researchers are working on new methods for NLP tasks, and they are also developing new AI-powered tools that can help people to communicate more effectively with each other. NLP has the potential to revolutionize the way we interact with computers and the way we communicate with each other.

